Table of contents

- Usability tests: basic principles

- The history of usability

- Defining usability

- Differences between usability and UX

- Benefits of usability tests

- Limitations of usability tests

- Conducting usability tests in practice

- Usability test: basic considerations

- Conducting usability tests: remotely or on site?

- Usability evaluation: Formative, summative or validative?

- Distribution of roles – Who coordinates the tests?

- Stakeholders – Who is present for the test?

- Which methods are suitable for which phase?

- Recruiting test subjects

- How many test subjects do I need?

- Selecting test subjects

- Preparing the usability test

- The test concept/script

- The best way to involve stakeholders/observers

- Evaluating the usability test

- What’s the best way to evaluate your results?

- Documenting insights

- In summary – usability testing that pays off

When I did my first tests, 17 years ago now, I still had to do a lot of work to convince people. I had to explain first of all what usability was. Today, by contrast, every decision-maker knows that usability is key to the success of any product. And that usability tests are crucial to that success.

In my experience, those who have never done usability tests themselves now have one of two views:

- That’s much too complex for us.

- Nice and easy, everyone can do it.

Both views are sort of right, and sort of wrong. You’ll find out why below.

1. Usability tests: Basic principles

The history of usability – From punch cards, mainframes and PCs to smartwear and the Internet of Things

The term ‘usability’ has only been around since the 1980s. But the question of how people handle machinery came up long before that, of course. With the advent of heavy machinery such as steam engines, aeroplanes and power plants, and a number of terrible accidents caused by operating errors, it became clear: People don’t always use devices the way their inventors imagine.

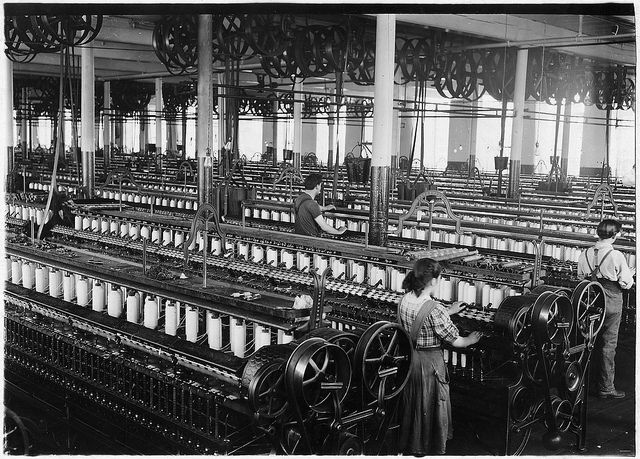

Equipment like this heavy spinning machine from 1912 constituted a major risk of injury for employees – everything had to run smoothly when it was operated.

In the 1970s, when mainframes and punch cards were still in use, there were some people around the globe dealing with human-computer interaction. With the arrival of the personal computer, usability became more and more important. It was no longer just a small group of well-educated technicians and engineers who worked with computers, but an increasingly vast public.

The first user tests took place back in 1947 – when the telephone keypad was developed at Bell Laboratories. It’s still in use today. And since the 1980s, usability tests have been used increasingly frequently. With every new technical device we surround ourselves with, they become more important. Virtual assistants, smartwear and the Internet of Things will not change that – on the contrary. Our colleague Jeff Sauro has a wonderful history of usability in English: A brief history of usability

Defining usability – What actually is usability?

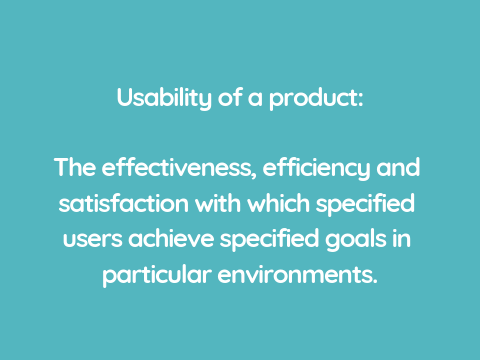

Almost everyone describes usability as ‘user-friendliness’. That’s not quite correct, however. An accurate synonym would be ‘serviceability’. But that sounds so cumbersome that hardly anyone uses the term – and the difference is academic, so that’s fine. Anyone looking for a really accurate definition of the terms should visit Wikipedia. Usability means that something can be used

- effectively,

- efficiently and

- satisfactorily.

In other words: I can achieve my goal using the application (effective). It takes as little effort as possible (efficient). And I’m happy with the action and the result (satisfactory). You could write whole scientific theses about the details of the definition, but that’s not necessary for the day-to-day. Crudely speaking, usability is simply what makes using something easy and convenient.

Definition of usability according to DIN EN ISO 9241

Differences between usability and user experience?

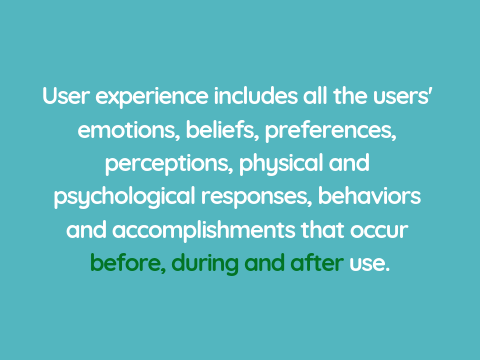

The line between usability and user experience (UX) is another one of those things. It exists in theory, but barely in practice. At the usability level, I’m already interested in whether the customer was satisfied. With UX we take it a step further: We also examine what the user did before they interacted with our product. And what they do afterwards. Whether they’ve enjoyed the whole ‘experience’. Nowadays, in a usability test, you usually also take into account aspects of UX. But most people still only talk about usability tests.

User experience involves much more than usability.

Benefits of usability tests

Usability tests involve setting aside your own opinion and seeing how other people handle an application. That way, you notice very quickly what works and what doesn’t. You can see which problems users have and what they understand straight away. You might also see approaches or possible uses that surprise you. In any case, every user test takes you a step further. You move away from your gut feeling towards empirical bases for improving the application. And you move away from discussions in the team based on assumptions about which solution is better, which order is more logical or what ‘goes down better’ in general.

Limitations of usability tests

Usability tests do have a large number of benefits – however, there are a few areas where you are better served by other methods. For example, when it comes to little graphic details or wording. These usually have such a minimal impact on usability that you would need huge numbers of participants to establish differences here. A/B tests, in which you test two different design versions live on the website, for example, are a better option here. In these tests, you can survey hundreds or thousands of users – an unrealistically high number for usability tests. It’s also problematic if you don’t have anything you can test yet.

So, for example, if there are only a few mock-ups, i.e. graphic sketches, for an application, you can try to build a click dummy. Using a program like Axure or InVision, you can add areas to the graphics which jump to the next page when the user clicks on or otherwise interacts with them. Complex menus, product filters or interactive maps can only be implemented in this way, however, if you put a great deal of time into them. It might be better in that case to set up a focus group in which users discuss the designs. Or you can use another highly recommended method, the five-second test.

The five-second test

This works as you might imagine: You show a user a screen for five seconds and ask them what they remember about it. Which elements they have seen. What they would click/tap on. This works remarkably well – and clearly shows which elements are the most striking. And which get lost. The five-second test isn’t as unrealistic as you might think. After all, when you look at analytics, you notice that many pages of a website are only visited for two or three seconds before the users click on to somewhere else. A website or a screen of an application must make it clear very quickly what it offers, otherwise users are soon on their way again.

2. Conducting usability tests in practice

What’s the best way to go about setting up usability tests? There are all kinds of best practices, but there are no ready-made templates you can simply copy and use for every test. The test objects, topics and resources available to you vary too much for that. Ideally, you should begin with a good plan:

Usability test – basic considerations

Create a study concept/research plan

In the study concept or research plan, you set out the following points:

- Project (what it’s about in two or three sentences)

- Project staff/managers (basically who makes decisions, who implements them)

- Target audience (who should use the application)

- Test object (which interim versions can we use when)

- Topic/study purpose (what do we actually want to find out)

- Questions/working hypotheses (which suspicions/discrepancies/uncertainties there are)

As with every document, the following applies: Don’t invest too much time in making it look nice. The study concept should be as clear and succinct as possible, and no more. Tips for communication within the team: Communicate insights

Conducting usability tests: Remotely or on site?

It’s also important that you establish as early as possible how exactly your usability tests should be conducted. The classic is the test in the studio, in what is known as the uselab or usability lab. Here, you have good equipment, the entire infrastructure is available, and there is almost no room for nasty surprises. But not everyone has their own usability lab. An office where you will not be disturbed during the test also works. In that case, however, you need to build in enough time to set everything up and check it before you start.

The remote test is an alternative. In this test, your test subject sits at their computer and you sit at yours. You communicate online via Skype, Hangouts or another video chat tool where you can share your screen. You give instructions and observe – just like in a classical test in the lab. These tests are also known as moderated remote tests, as you and your test subjects sit down at the same time. The alternatives are asynchronous tests, which are unmoderated. The test subjects receive a guide they work through themselves. Software records their interaction with the test object and you collect their comments and evaluations with a questionnaire. This saves you the work of moderation. But you shouldn’t underestimate how much work is involved in preparing this kind of test properly. And it also takes a lot of time to evaluate the recordings and questionnaires.

Unmoderated tests can save time

In my experience, unmoderated tests only really go much more quickly if you have a lot of test subjects – from around 20 subjects, there is a noticeable saving in terms of time. And in remote tests, some information is generally lost. Even with good technology: You simply get a lot more from how a person reacts, what they think about the test object, and the problems they have when you’re sitting beside them. I personally always prefer a test on site or in the uselab.

Usability evaluation: Formative, summative or validative?

We’re going to get a little scientific again now. When you read the literature on usability tests, you’ll often come across the term ‘usability evaluation’. This just means assessing usability. And sooner or later, you’ll happen upon the terms ‘formative’, ‘summative’ and ‘validative’. What do they mean and do you need them? You don’t strictly need them in practice, but so that you know what colleagues are talking about, I’ll explain them briefly:

Explorative (formative): You do an explorative or formative test early in the project. It’s about finding something out. You want to know, for example, whether a classic navigation bar or a mega dropdown works better for your project. Or how people generally handle configurators. In such cases, you usually test prototypes – or competing applications. This kind of explorative test uncovers opportunities to give the product a form – that’s why it’s called a formative test.

Assessment (summative): An assessment test or summative test is much closer to the finished product than a formative test. Here, you are testing prototypes, or usually even parts which are practically complete. The subjects can complete tasks independently, and as a moderator, you usually just need to guide and observe. Most tests we carry out on a day-to-day basis are summative tests.

Evaluating (validative): Finally, the validation test or evaluation test is performed towards the end of the project. In this test, you assess whether the problems you observed in previous tests have been resolved. And, above all, whether the product is working as it should. In these tests, it’s good to work with metrics. They give you an objective assessment of how you currently stand in comparison to earlier versions or the competition.
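What working with metrics can look like in a validation test: the following is a minimal sketch that computes a task completion rate and the median time on task for one version of the product. The record layout, the field names and the sample values are all made up for illustration, so adapt them to whatever you actually record in your sessions.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskResult:
    """One subject's attempt at one task (hypothetical record layout)."""
    version: str      # e.g. "old" or "new"
    completed: bool   # did the subject reach the goal?
    seconds: float    # time spent on the task


def summarise(results: list[TaskResult], version: str) -> dict[str, float]:
    """Completion rate and median time of successful attempts for one version."""
    attempts = [r for r in results if r.version == version]
    if not attempts:
        raise ValueError(f"no results for version {version!r}")
    successes = [r for r in attempts if r.completed]
    return {
        "completion_rate": len(successes) / len(attempts),
        # With no successful attempt there is no meaningful time to report.
        "median_seconds": median(r.seconds for r in successes) if successes else float("nan"),
    }


if __name__ == "__main__":
    # Made-up sample data: three subjects per version, one task.
    sample = [
        TaskResult("old", True, 95.0),
        TaskResult("old", False, 180.0),
        TaskResult("old", True, 120.0),
        TaskResult("new", True, 60.0),
        TaskResult("new", True, 75.0),
        TaskResult("new", True, 50.0),
    ]
    for version in ("old", "new"):
        print(version, summarise(sample, version))
```

With only a handful of subjects, numbers like these are a rough indication rather than a precise measurement, so treat small differences between versions with caution.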

If there’s no money: Hallway and guerrilla tests

The opposites of the very formal, scientifically accurate usability evaluation are the hallway test and the guerrilla test. The hallway is exactly where you will find the subjects for the test of the same name. You simply grab a colleague who ideally has nothing to do with the project in question and has no specialist knowledge of the world of UX or the content of your project. You then carry out an initial quick test with this subject – you shouldn’t need any more than ten minutes per session.

The guerrilla test procedure is similar. Instead of finding your subjects in the office hallway, however, you find them in the café, on the street or in the park. These methods are quick and cheap but have their own problems: you have little choice of subjects close to your target audience, and the environment is difficult to control. But it’s better to do a test with less suitable subjects than no test at all.

Distribution of roles – Who coordinates the tests?

A very important role that many people underestimate is that of the moderator, or test coordinator. As a moderator, you guide subjects through the test. Your job is to make sure that they feel comfortable. That they act as naturally as possible. That they perform the tasks and know what is expected of them in the test.

The great skill here is in gently guiding the subjects but influencing them as little as possible. It sounds easy, but requires some experience before you can do it really well. Entire books have been written on the subject – this one is particularly recommended: The Moderator’s Survival Guide. As well as moderation, a second important task in usability testing is observation. In many projects, the observer and reporter is a second person. That is good because you can then focus fully on the subjects as the moderator.

One small disadvantage is that the subjects often feel a little more uncomfortable at the beginning – there are two people sitting next to them who are watching their fingers closely. If you are both the moderator and observer, then I strongly advise you to record the sessions on video. After all, in every single test, you will find yourself writing something down at the exact moment the test subject clicks on something, and you miss it. If you have a video recording, you can always take another look later and find out exactly what was going on.

Stakeholders – Who is present for the test?

As well as the moderator and potentially the observer, other people may attend the usability test. These are the stakeholders. They are the colleagues who are working on the product and the decision-makers. The more of them are there, the better. After all, you can talk until you’re blue in the face and give as vivid an account of the tests as you like – but when trying to convince people that the problems you’ve observed are real and important, nothing is as effective as letting them see the problems with their own eyes. It’s also good because you can then discuss, evaluate and sort through the observations together after the test. At the end, you can then do a workshop where you reflect on how you can solve the individual problems.

But to ensure that the subjects are not unsettled by all the observers, and that the observers can talk during the test, the observers must sit in another room. A uselab will often have large two-way mirrors. The observers in the adjoining room can see the subjects through these, while the subjects can only see a large mirror. Alternatively, the image of the test object and the image of the subject and moderator are transmitted into the observation room by video. The observers must not, however, appear in the test room.

Only the subject, the moderator and potentially the observer taking notes should be in the test room. Stakeholders have no place there. The presence of several people may in itself be disruptive. The subject may also think that these people have something to do with the product and will be reserved. And ultimately, those involved in the project find it difficult to stay calm and quiet and refrain from sniffing, laughing and, above all, asking questions during the user test.

When should I test?

Many colleagues have a knee-jerk response to the question of when you should test: Test early, test often! After all, the earlier in the project you get your test results, the bigger the impact they can have on the finished product. And, importantly: The sooner you discover a problem, the less effort it takes to deal with it. If it only becomes apparent once the application has been created, designed and programmed, you have to go back many steps to deal with it.

Which methods are suitable for which phase?

As an example, here are the tests I would ideally conduct when relaunching a big website or building a new app:

Test of the existing website:

This way we don’t just find the problems the users actually have with the site. We also see what works well and which sections and functions we should keep.

Test of selected competing sites:

It’s not about copying. You want to know what expectations the users have, what they like and what they don’t.

Card sorting for the information architecture of the new navigation:

Cards with terms are sorted into groups by the users. This allows a generally valid navigation to be developed, for instance.

Tree-testing the new navigation:

In tree testing, I let the user look for things only by clicking through the navigation. That way, I see if they understand the terms and how they are sorted. (You can find an excellent explanation of this process here: Tree Testing for Websites)

Paper prototype test of the key pages:

In this test, you give the test subjects draft pages (scribbles, wireframes or print-outs of finished designs). You ask them what they would click on and what they would expect in each case. (More details on this method: Paper Prototyping)

Click dummy test of the initial designs:

The best approach is to test with early programmed versions. However, the designs of the screens are often ready well in advance, and you can use them to create click dummies and perform tests with them.

Classic usability test with the initial programmed versions:

Only now will we do the test that most people do: The test with the initial version that the programmers have finished. This is close to the end product, of course, but late in the process.

Test with the beta version:

Shortly before the website goes live or the app is delivered, you can use a final test to check whether everything is working, and whether the corrections have introduced any new usability problems. It’s better to notice now that something isn’t working than after it’s released.

Of course, you can’t implement this maximum program in every project. But it shows you where it makes sense to test. In case of doubt, always opt for earlier tests. And ideally save on detail – if you’re on a tight budget, it’s better to test with fewer subjects per round of testing but plan enough rounds of testing.

3. Recruiting test subjects

How many subjects do I need for a usability test?

I almost always only hear one of two answers to the question ‘How many subjects do you need?’:

- ‘It depends’ and

- ‘Five’.

You have probably also heard the magic number five in conjunction with usability tests. It was brought into the world by the best-known web usability expert Jakob Nielsen (see Why You Only Need to Test with 5 Users). According to Nielsen: you only need five users to find 85% of usability problems.

The classic presentation according to Jakob Nielsen: The more subjects you test, the fewer new problems you find in the usability test.

Unfortunately, however, this is too simplified, as the first answer is actually correct: It depends. After all, there’s a prerequisite for finding 85% of problems with five subjects: The probability that a user will stumble upon these problems is at least one third. What does this mean? It means that a problem needs to occur quite frequently for you to find it with just five subjects. A third of all users need to have this problem – that’s a lot. In a typical usability test, I might find 20 problems. But only two to four of these affect a third or more of all users. If I test again with five users, then I will probably observe these two to four frequent problems again, plus perhaps ten others that I hadn’t seen before.

You can never test the entire application in a single usability test

The more often I repeat this, the fewer new findings I uncover. So you see: In every user test, you also find problems that occur more rarely. On the other hand, you might miss severe problems that don’t occur so often. And even for the frequent problems, the probability that you will find them is also only around 85%. And ultimately these figures only apply if the users use the function in question in your test at all.
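The arithmetic behind the 85% figure can be sketched in a few lines. The sketch assumes the simple model commonly used for this argument: every subject independently runs into a given problem with probability p, so the chance that at least one of n subjects hits it is 1 - (1 - p)^n. The function name and the example probabilities are my own choices for illustration.

```python
def chance_of_finding(p: float, n: int) -> float:
    """Probability that at least one of n subjects runs into a problem
    that affects a share p of all users (subjects assumed independent)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 0:
        raise ValueError("n must not be negative")
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    # A frequent problem (about a third of users) versus a rarer one (1 in 10).
    for p in (0.31, 0.10):
        for n in (5, 10, 15):
            print(f"p={p:.2f}, n={n:2d}: {chance_of_finding(p, n):.0%}")
```

For a problem that affects 31% of users, five subjects give a chance of roughly 84% of seeing it at least once. For a problem that affects only one user in ten, the same five subjects give about 41%, which is why rarer problems keep turning up in later rounds.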

It might sound trivial at first, but you can never really test the entire application in a single usability test. Hardly any websites are so small, hardly any apps have so few functions, and hardly any product has so few options for use that you can really examine the entire content and scope of its functions in a single usability test. In summary: Five subjects per test is a good guide. But when you want to test lots of functions or you have vastly divergent target audiences, you need more test subjects. And you should build in several rounds of testing in the course of the project.

Selecting test subjects

Ideal test subjects come from the target audience. So if you’re testing an application for seniors, you need seniors in the usability lab. If you’re testing a website for children, invite children to take part. Anyone working on the project, and all employees of the company whose site or app you’re testing, are unsuitable as subjects, as are family and friends. They are biased and know too much about the background. The idea of the usability test, after all, is to see how real users deal with the application.

If your target audience consists of several, very different subgroups, then try to take that into account when selecting test subjects. So in my tests, I make sure I get a balanced mix of genders, age groups and professions. You don’t actually need several users from each group per round of testing. But if you’re doing several rounds of testing, for example, you can ensure a good mix across the rounds.

4. Preparing the usability test

Preparing the test sessions

The moderator and observers are decided, the test date is in the diary, and the subjects are being recruited. What you need to do next:

- Declaration of consent: A brief (!) form to sign, confirming that the subjects consent to taking part in the test and being observed and (if necessary) recorded on video.

- Non-disclosure agreement (NDA): It’s all the better if you can leave this out. However, in some projects, it’s necessary for the subjects to agree that they won’t immediately post all the information online about the strictly confidential new prototypes. Yet you should keep the agreement brief and in plain English – not legalese. Otherwise, you’ll waste a lot of time on discussion and will create a bad atmosphere from the outset.

- Incentive payments: The term ‘incentive’ has become established for payments. Subjects receive an allowance, usually between €30 and €100. But if you’re testing a shopping website, for example, it makes more sense to give your subjects a budget. They can then use it to shop on the site. This is more fun for them and is more realistic.

The test concept/script

Just going ahead and testing isn’t a good idea. Although you’re sure to get something out of it, the time with the users is too valuable to leave the results to chance. What matters is that:

- the test situation is as natural as possible,

- the users act as they would outside the test,

- the results are representative of real use, and

- you see first and foremost what the users do.

The last point is important: Inexperienced testers get to hear lots of opinions from the test subjects. They say how they like the images, which colours they don’t like or what’s recently happened to them on another website. But as a good moderator, you guide the users in such a way that they work through the tasks you give them. That’s where you will find usability problems, not in casual conversation with the user.

A must at every test session: Declaration of consent and study concept.

The most important tool for managing the sessions is the test concept, also known as the test script or guide. The test concept includes:

- What would you like to know?

- What are your test hypotheses?

- Who is taking part and how many are there?

- How long will the sessions last?

- Which tasks should the subjects solve?

- Which questions will you ask the subjects?

Important: Always begin with an easy task. The subjects need to adapt to the situation first of all. If you send them into a complex area where many people have problems to start with, their motivation drops. The risk is that subjects blame themselves and lose confidence that they can solve the remaining tasks.
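If you keep your test concept in a structured form, a couple of these rules can be checked automatically before the first session. The following is only a sketch: the `Task` and `SessionScript` records, the difficulty scale from 1 (easy) to 5 (hard) and the wording of the warnings are hypothetical and do not come from any existing tool.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """One task from the test script (hypothetical structure)."""
    title: str
    difficulty: int   # own estimate: 1 = easy ... 5 = hard
    minutes: int      # planned time for this task


@dataclass
class SessionScript:
    """The parts of a test concept that can be checked mechanically."""
    goal: str
    hypotheses: list[str]
    session_minutes: int
    tasks: list[Task] = field(default_factory=list)

    def warnings(self) -> list[str]:
        """Return plain-text warnings; an empty list means nothing was spotted."""
        if not self.tasks:
            return ["The script contains no tasks."]
        found = []
        easiest = min(task.difficulty for task in self.tasks)
        if self.tasks[0].difficulty > easiest:
            found.append("The first task is not the easiest one.")
        planned = sum(task.minutes for task in self.tasks)
        if planned > self.session_minutes:
            found.append(
                f"Tasks need {planned} min, but the session lasts "
                f"{self.session_minutes} min."
            )
        return found


if __name__ == "__main__":
    script = SessionScript(
        goal="Can new customers complete an order?",
        hypotheses=["The delivery options are hard to find."],
        session_minutes=30,
        tasks=[Task("Complete the checkout", 4, 20), Task("Find a product", 1, 15)],
    )
    for warning in script.warnings():
        print(warning)
```

A check like this does not replace a pilot session with a colleague, but it catches the obvious slips before a subject is sitting in front of you.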

The best way to involve stakeholders/observers

When others observe the test, that’s first and foremost a good thing, because they can then see with their own eyes which problems users have with their product. But this has an even bigger advantage for you: You’ve got a whole group of helpers. And when else do you have the opportunity to get executives, product managers and other bosses to work for you? Before the tests, explain to the observers how they will be conducted. And give out observation forms where the observers should note down everything they notice.

I personally think it’s even better to give out a big pile of sticky notes or index cards. One observation is written on each. After each individual usability test, you collect the sticky notes and stick them to pinboards, for instance. They can then be arranged into themed groups and duplicates can be thrown away. It’s very important that the observers don’t disrupt the test. If only a mirror separates them from the subjects, you must impress upon them that they shouldn’t speak loudly, as the subjects might hear them and become unsettled. There’s one thing they absolutely must not do: Laugh out loud. That’s extremely uncomfortable for a test subject – and for you as a moderator, as you don’t know exactly what’s going on next door.

Sometimes stakeholders want to be able to ask the subjects questions. But this must never happen during the test itself. At most, it can happen afterwards. And I personally think it’s better if the test subjects don’t meet the observers – this always feels odd to them. You can avoid this by ensuring that the observers send their questions to the moderator (e.g. by e-mail), who then puts them to the test subject at the end of the session.

5. Evaluating the usability test

What’s the best way to evaluate your results?

There’s a very important tip for the evaluation: You should ideally do it straight away. Look at your notes after every individual session and correct everything that isn’t very legible, for example. Even with an amazing power of recall, after five or six sessions in a day, the individual test subjects and their specific problems will get muddled in your memory.

And at the end of the day, you should ideally have a rough look at all notes. Evaluation should take place no later than the following day. If you’ve taken my previous tip to heart and had as many stakeholders as possible there as observers, then you should ideally get together after the last test for your evaluation workshop. Yes, these test days are long and tiring. But incredibly productive. And practically everyone involved goes home at the end of the day feeling exhausted, yet very satisfied.

Documenting insights

Even when you’ve set a model example and done an evaluation workshop right afterwards – you usually can’t avoid documentation. You must pass on the information you obtained to those who weren’t there. Also, you should be able to read it again afterwards and nothing should be forgotten. You can find extensive tips here: Communicating insights. There are two standard ways to sort the problems you’ve found:

- According to the sequence in which they occur in use

- According to severity (from severe to slight problems)

You sort them the first way if the report is mainly for those who will continue to work intensively with it. It’s better to sort them the second way, from severe to minor, if you think that many people won’t read the report through to the end. And if you want to make sure that the most serious problems are really dealt with first of all – and not those which are easiest to correct.
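If you collect your findings in a list or spreadsheet, both sort orders are only a line of code away. Here is a minimal sketch, assuming a hypothetical `Finding` record with the step in the flow where the problem occurred and a severity rating from 1 (slight) to 4 (severe). The scale and the sample findings are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One usability problem from the test (hypothetical record layout)."""
    step: int       # position in the flow where the problem occurred
    severity: int   # assumed scale: 1 = slight ... 4 = severe
    summary: str


def by_sequence(findings: list[Finding]) -> list[Finding]:
    """Order for readers who will work through the application step by step."""
    return sorted(findings, key=lambda f: f.step)


def by_severity(findings: list[Finding]) -> list[Finding]:
    """Most severe first; within the same severity, keep the order of use."""
    return sorted(findings, key=lambda f: (-f.severity, f.step))


if __name__ == "__main__":
    findings = [
        Finding(3, 4, "Order button is overlooked"),
        Finding(1, 2, "Search field is hard to spot"),
        Finding(2, 4, "Delivery costs only shown at the end"),
    ]
    for finding in by_severity(findings):
        print(finding.severity, finding.step, finding.summary)
```

Keeping both orders available means you can hand the sequence view to the team that works through the application page by page, and the severity view to decision-makers who only read the first page.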

Classification according to the seven dialogue principles

To structure the evaluation and make clear why your observations constitute a problem, you can classify them based on the seven principles of dialogue. These seven principles also appear as criteria in the standard DIN EN ISO 9241, for instance. They are the requirements for the interface between the user and the system (dialogue design). A dialogue should be:

- fault-tolerant

- suitable for the task

- controllable

- self-describing

- customisable

- supportive of learning

- consistent with user expectations

You can find more details on the standard on Wikipedia. Ultimately, I believe the following two points are crucial in the usability test report:

Firstly: Give a suggestion for how to solve every problem.

If you are used to scientific work, you might be accustomed to doing things differently. But in practice, it’s worth its weight in gold for everyone who will continue working with your report if they get an idea right away of how they can manage the problems found. And this also shows that you don’t just talk the talk, you also have specific ideas on how to make something better.

Secondly: Don’t just point out the errors.

Bear in mind the psychology of the person reading the report. It can quickly read like a list of errors. And the people responsible for these errors will be the readers of the report – designers, programmers, product owners. Depending on personality, some people don’t take that so lightly. So it’s a good idea not just to write up the problems that occurred. Instead, you should also write up what worked well. This makes the report a much easier read. It also has a practical benefit: by pointing out the sections that work well for the users, you make it clear that they don’t need to be changed during corrections. Otherwise, there is a risk of new usability problems emerging when others are being resolved.

6. In summary – usability testing that pays off

Usability tests are excellent. They offer practical and highly relevant results, are comparatively quick and easy to perform and are fun every time. If everything still isn’t going perfectly in your business, don’t worry about it. In practically every company I know, there are a few areas where there is still room for improvement. You can gradually work on getting better. The biggest step is getting started with testing.

From there, the only way is up. Because as you know: Any test is better than no test at all. And with time, your tests will become more and more professional. You will get better results and the tests will be more relaxed – usability tests are always quite tiring, even for moderators and observers with lots of experience. You will find a good summary of all of this information in the post 9 golden rules for solid user testing.

Still got questions? Or do you have any other tips or experiences you’d like to share? I’d love to hear from you!